Key takeaways:

- What is the Model Context Protocol (MCP)? MCP is a standardized integration layer that connects AI models with external tools and data sources for real-time decision making.

- How does MCP improve AI performance? MCP reduces AI isolation by allowing models to access live data and perform coordinated tasks, enhancing their business value.

- Why is MCP important for enterprises? It streamlines workflows and reduces the complexity of custom integrations, enabling scalable, context-aware AI deployment.

- What challenges are associated with MCP? It requires robust oversight to mitigate risks like unintended automation, unauthorized access, and compliance issues.

- What are real-world examples of MCP in action? Organizations like Microsoft and Wrike utilize MCP to enhance AI functionalities and integrate real-time project data seamlessly.

You’ve invested in AI. The tools are in place. But your systems still can’t talk to each other — and your results don’t match the hype.

That disconnect is the silent blocker for many enterprises today. While AI models are getting smarter, most are stuck working in silos, unable to access the relevant context they need to deliver real business value.

That’s where the Model Context Protocol (MCP) comes in. Think of it as the USB-C of AI. It’s a standardized integration layer that seamlessly connects AI tools with the external data sources and applications they need to be truly useful.

Whether you’re a CIO rethinking architecture or an operations leader streamlining delivery, MCP is the key to unlocking the next phase of AI-powered tools.

What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open standard, introduced by Anthropic in November 2024, that connects AI models to external tools, data sources, and services so they can make context-aware decisions on live data.

Traditional AI applications are often limited by their training data — what they’ve seen before is all they can use.

MCP, however, creates a standard interface for AI assistants and autonomous agents to communicate with application programming interfaces (APIs) and perform coordinated tasks.

Think of MCP like a skilled translator in a global business meeting. Rather than building a new translator for every conversation, it ensures that every participant (AI model, tool, or data source) can understand and interact with one another seamlessly, no matter their native “language.”

MCP:

- Acts as a unified interface that connects AI models with tools, data, and services across your tech stack

- Lets AI models invoke and operate external tools (e.g., CRMs, spreadsheets, project trackers) in real time

- Powers multi-agent workflows where AI systems can collaborate, delegate tasks, and orchestrate outcomes

Whether surfacing insights from scattered documents, automating decisions across platforms, or coordinating AI-powered tools in dynamic workflows, MCP makes agentic AI practical.
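To make the "unified interface" idea concrete, here is a minimal sketch of a single registry through which every tool is listed and called the same way. It is an illustration only, not the MCP SDK: `Tool`, `ToolRegistry`, `crm_lookup`, and `create_task` are hypothetical names, and the handlers return canned data.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    handler: Callable[..., Any]


class ToolRegistry:
    """One uniform entry point for every tool, whatever system backs it."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> List[str]:
        # The model discovers tools by name instead of hard-coding them.
        return sorted(self._tools)

    def call(self, name: str, **arguments: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name].handler(**arguments)


# Hypothetical tools standing in for a CRM and a project tracker.
registry = ToolRegistry()
registry.register(Tool("crm_lookup", "Find a customer by ID",
                       lambda customer_id: {"id": customer_id, "tier": "gold"}))
registry.register(Tool("create_task", "Create a project task",
                       lambda title: {"title": title, "status": "open"}))
```

Calling `registry.call("create_task", title="Draft brief")` goes through the same path as any other tool, which is the property a standard interface is meant to provide.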

The problem MCP solves

Today’s large language models (LLMs) are trained on massive datasets, but once deployed, they operate in isolation. They rely on stale training data, which makes hallucinations more likely, and they can’t access live dashboards, internal platforms, or enterprise applications on their own.

As Anthropic puts it:

“Even the most sophisticated models are constrained by their isolation from data — trapped behind information silos and legacy systems.”

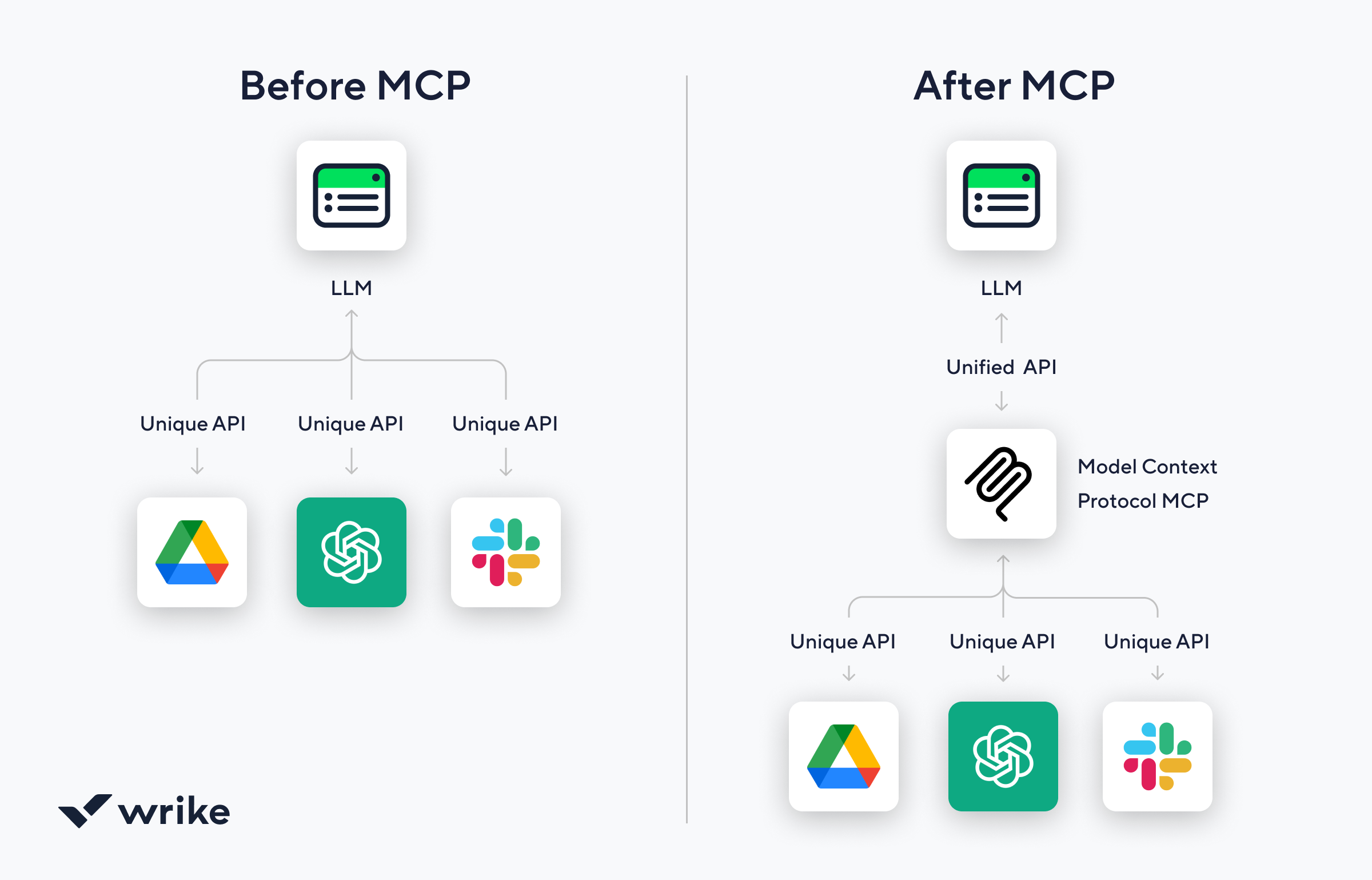

Here’s the core issue: Connecting AI to your business often means building custom integrations. Every time an agent needs to perform an action, developers are forced to wire up fragile, one-off connectors. This is time-consuming and impossible to scale.

Imagine trying to power a smart home where every device needs a different kind of socket and wiring. You’d spend more time retrofitting connections than actually using the system.

MCP is the standardized wiring that lets all types of AI agents plug into enterprise data, external data sources, and critical AI tools in a structured way. It’s not the smart home device itself; it’s the infrastructure that makes everything work together.
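The scaling problem is easy to see with rough arithmetic. The sketch below assumes every AI application needs to reach every tool; under that assumption, one-off connectors grow with the product of the two counts, while a shared protocol needs roughly one implementation per application and one per tool. The function names and figures are illustrative, not measurements.

```python
def point_to_point_connectors(ai_apps: int, tools: int) -> int:
    """Custom integrations: one connector for every app/tool pair."""
    return ai_apps * tools


def shared_protocol_adapters(ai_apps: int, tools: int) -> int:
    """Shared protocol: each app and each tool implements it once."""
    return ai_apps + tools


# Illustrative numbers only: 4 AI applications and 10 business tools.
custom = point_to_point_connectors(4, 10)   # 40 connectors to build and maintain
standard = shared_protocol_adapters(4, 10)  # 14 protocol implementations
```

Adding an eleventh tool costs four new connectors in the first model and one new implementation in the second.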

MCP vs. RAG vs. AI agents

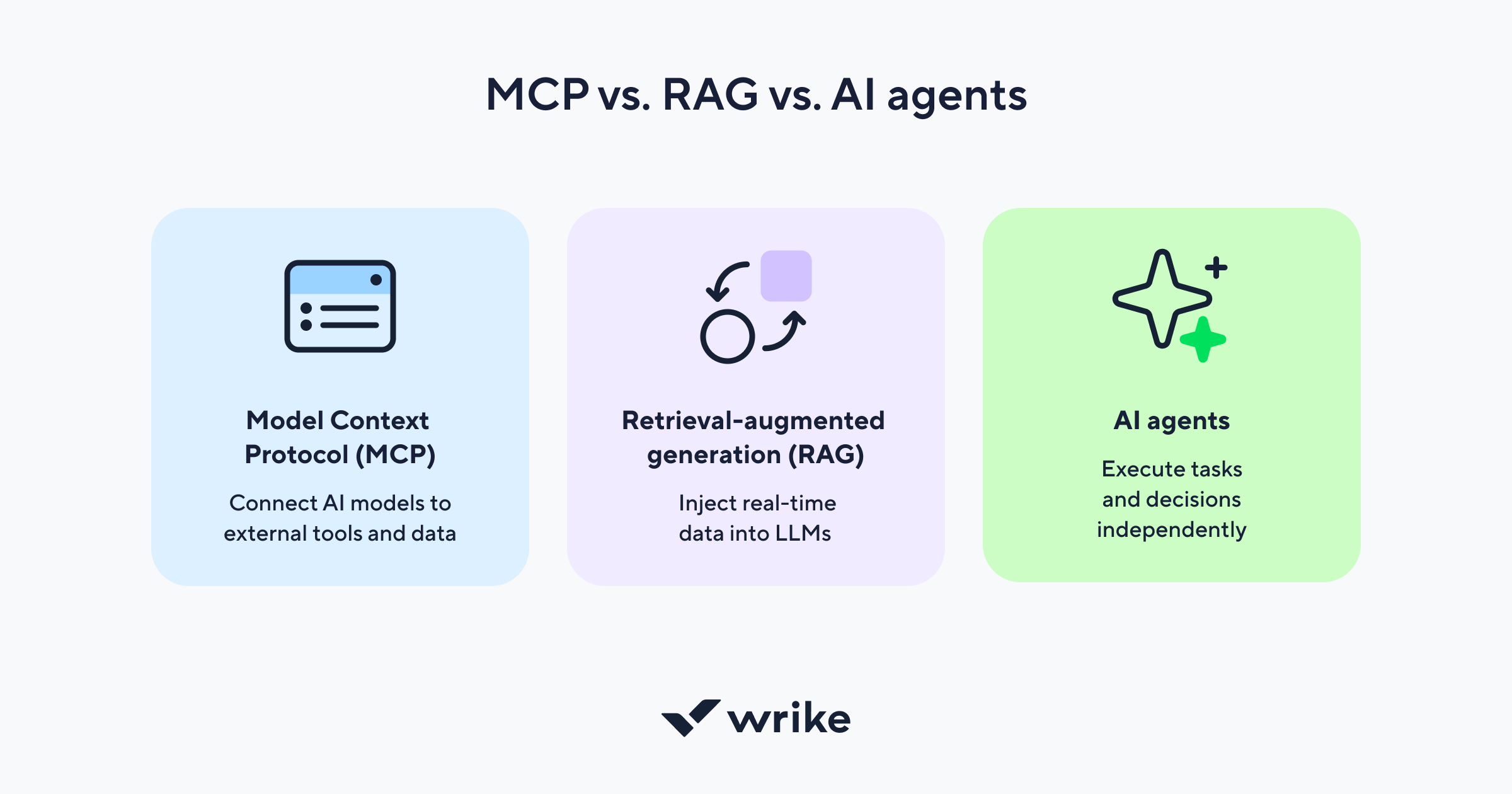

As AI adoption accelerates, three foundational components shape how organizations connect intelligent systems to real work: Model Context Protocol (MCP), retrieval-augmented generation (RAG), and AI agents.

Here’s how they compare:

| Feature | MCP | RAG | AI agents |
| --- | --- | --- | --- |
| Purpose | Standardize tool and data access for AI models and agents | Improve model responses by injecting external, up-to-date data | Autonomously perform tasks and make decisions across workflows |
| Autonomy | None: MCP is an enabler | None: supports generative models | High: agents act autonomously with minimal input |
| How it works | Defines a context protocol between AI models and external tools | Pulls in relevant documents from external data sources to inform answers | Combines reasoning, planning, and tool execution to take action |
| Context handling | Structured integration of relevant context into models | Supplements model training data with dynamic reference materials | Uses context and feedback to act in complex environments |
| Real-time ability | Yes, enables real-time tool execution and data flow | Limited, typically read-only information retrieval | Yes, agentic AI operates in real time to update and adapt |
| Security and governance | Centralized, easier to govern and audit API/tool access through MCP | Decentralized; less control over what’s retrieved | Varies; needs oversight to avoid unintended actions |
| Examples in workflow | Allowing AI tools to submit forms, trigger actions, or connect to project systems | Searching a knowledge base or CRM to improve a chatbot response | An AI assistant that adjusts project timelines or reallocates resources autonomously |

In short:

- MCP provides the connective tissue between models and action.

- RAG enhances what a model knows.

- AI agents use both to act with intelligence.
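The three roles can be sketched side by side in a few lines. This is a toy illustration under stated assumptions: the knowledge base, the `create_ticket` tool, and the keyword checks are hypothetical stand-ins, and a real agent would let a model decide what to retrieve and which tool to call.

```python
from typing import Callable, Dict, List

# Hypothetical read-only knowledge base for the RAG-style step.
KNOWLEDGE_BASE = {
    "refund policy": "Refunds are issued within 14 days.",
    "sla": "Priority tickets get a response in 4 hours.",
}

# Hypothetical action exposed through one standard entry point.
TOOLS: Dict[str, Callable[[str], str]] = {
    "create_ticket": lambda summary: f"ticket created: {summary}",
}


def retrieve(query: str) -> List[str]:
    """RAG-style step: look up text to add to the model's context."""
    return [text for key, text in KNOWLEDGE_BASE.items() if key in query.lower()]


def call_tool(name: str, argument: str) -> str:
    """MCP-style step: invoke an action by name."""
    return TOOLS[name](argument)


def agent(request: str) -> List[str]:
    """Agent-style loop: gather context, then decide whether to act."""
    log = [f"context: {chunk}" for chunk in retrieve(request)]
    if "ticket" in request.lower():  # stand-in for model reasoning
        log.append(call_tool("create_ticket", request))
    return log
```

Here `retrieve` only changes what the model knows, `call_tool` changes something in the outside world, and `agent` is the piece that chooses between them.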

This layered understanding is crucial for decision makers planning AI deployments that move beyond demos and into production environments that demand scalability and context-aware AI.

MCP architecture

Ever wonder how AI agents actually connect to tools and data behind the scenes? At its core, MCP follows a client-server architecture that supports seamless interaction between agents, tools, and data environments. Here’s how each component fits into the broader MCP ecosystem and enables next-generation AI applications.

MCP servers

An MCP server is a program that exposes a specific set of capabilities (tools the model can call, data it can read, and reusable prompts) through the protocol. Think of servers as the “hands” of the system: each one knows how to do real work in one place, such as a file system, a CRM, or a project tracker.

Just as your hands carry out what your brain decides (“I want to pick up that coffee mug”), MCP servers carry out the requests they receive: fetching a record, creating a task, or updating a dashboard.

Without MCP servers, agents would have nothing to act on.

Larger organizations may run multiple MCP servers in parallel or deploy new MCP server implementations to scale across departments. These servers form the foundation for stable, secure agentic AI workflows.

MCP hosts

The MCP host is the AI application that the user or agent works in, such as a chat assistant, an IDE, or an agent runtime. It holds the model, decides which servers to connect to, and enforces user consent and data permissions before any tool is invoked.

The host is the “brain”: it interprets the goal, decides what needs to happen, and sends instructions out to the rest of the body.

MCP clients

An MCP client is the connector that lives inside the host and maintains a dedicated, one-to-one connection with a single server. The host creates one client for each server it talks to; each client negotiates capabilities, forwards requests, and returns the server’s responses.

The clients are the nerves and neural pathways connecting the brain to the hands. They transmit messages securely and accurately, so that signals from the brain (host) reach the right hands (servers).

Together, the three roles turn an agent’s instruction, like creating a task, sending an alert, or updating a dashboard, into a call that a specific system carries out.
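One way to picture how the three roles relate is the arrangement described in the MCP specification, where a host application creates one client per server connection. The sketch below models that relationship with plain in-process objects; the class names, the `tracker` and `files` servers, and their tools are hypothetical, and there is no real transport or SDK involved.

```python
from typing import Any, Callable, Dict, List


class Server:
    """Exposes a named set of tools (stand-in for an MCP server)."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., Any]]) -> None:
        self.name = name
        self.tools = tools


class Client:
    """Holds a dedicated connection to exactly one server."""

    def __init__(self, server: Server) -> None:
        self.server = server

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        return self.server.tools[tool](**arguments)


class Host:
    """The AI application: creates one client per server and routes calls."""

    def __init__(self) -> None:
        self.clients: Dict[str, Client] = {}

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}

    def call(self, server_name: str, tool: str, **arguments: Any) -> Any:
        return self.clients[server_name].call_tool(tool, **arguments)


host = Host()
host.connect(Server("tracker", {"create_task": lambda title: {"title": title}}))
host.connect(Server("files", {"read_file": lambda path: f"contents of {path}"}))
```

Because each client talks to only one server, adding or removing a system means adding or removing one connection without touching the others.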

Data sources

For AI agents to behave intelligently, they need real-world context. MCP enables access to internal and external data sources, including SaaS platforms, enterprise systems, and training data. This context includes everything from data stored in CRMs to external data pulled from APIs.

Data sources are like the senses and stored memories your brain uses to make decisions. Whether you’re recalling where you left your keys or reacting to a loud noise, your body constantly taps into internal memory and external input.

MCP enables agents to access real-time external data or records, the way your brain relies on your senses and memory to act appropriately.

Through MCP, agents can access data directly when needed, unlocking relevant context without custom-coding connections each time.
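In MCP, servers can expose data as resources that are addressed by URI and read on demand. The sketch below imitates that pattern with an in-memory store; `ResourceServer`, `build_context`, and the `crm://` and `docs://` URIs are hypothetical names chosen for illustration.

```python
from typing import Dict, List


class ResourceServer:
    """Toy server that lists and reads resources by URI."""

    def __init__(self, resources: Dict[str, str]) -> None:
        self._resources = resources

    def list_resources(self) -> List[str]:
        return sorted(self._resources)

    def read_resource(self, uri: str) -> str:
        if uri not in self._resources:
            raise KeyError(f"unknown resource: {uri}")
        return self._resources[uri]


def build_context(server: ResourceServer, uris: List[str]) -> str:
    """Fetch only the requested records and format them for a prompt."""
    return "\n".join(f"[{uri}] {server.read_resource(uri)}" for uri in uris)


# Hypothetical records standing in for CRM and documentation data.
data_server = ResourceServer({
    "crm://accounts/acme": "Acme Corp, renewal due in March",
    "docs://runbooks/deploy": "Deploys run every weekday at 10:00",
})
context = build_context(data_server, ["crm://accounts/acme"])
```

The agent pulls a record at the moment it needs it, so the context reflects the current state of the source rather than whatever the model saw in training.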

MCP protocol

The protocol itself is a set of standardized rules, carried as JSON-RPC 2.0 messages, that govern how information flows between AI agents, tools, and data sources. It ensures that every component in the MCP ecosystem can communicate clearly and securely, regardless of the underlying platform or system.

Think of it as the electrical signals in the nervous system — the invisible current that powers every interaction, ensuring the “body” of your AI stack responds instantly and correctly.

This open protocol removes the need for custom integration with every tool, reduces friction, and creates a consistent integration layer across AI platforms and enterprise environments.
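MCP messages follow JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such a request and handles it with a toy in-process server so the message shapes are visible. The `get_status` tool and the helper function names are hypothetical, and details such as the transport, initialization handshake, and full result schema are left out.

```python
import json
from typing import Any, Callable, Dict


def make_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> str:
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle_request(raw: str, tools: Dict[str, Callable[..., str]]) -> str:
    """Toy server side: run the named tool and wrap the result or an error."""
    request = json.loads(raw)
    reply: Dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        # -32601 is the JSON-RPC code for "method not found".
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif params.get("name") not in tools:
        # -32602 is the JSON-RPC code for "invalid params".
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
    else:
        text = tools[params["name"]](**params.get("arguments", {}))
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


# Hypothetical tool used only for this illustration.
demo_tools = {"get_status": lambda project: f"{project}: on track"}
ok = json.loads(handle_request(make_tool_call(1, "get_status", {"project": "Launch"}), demo_tools))
missing = json.loads(handle_request(make_tool_call(2, "no_such_tool", {}), demo_tools))
```

Every request carries the same envelope (`jsonrpc`, `id`, `method`, `params`), which is what lets any compliant client talk to any compliant server.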

Benefits of MCP

When your tools speak the same language, everything moves faster. MCP streamlines coordination so decisions happen in real time and teams can scale without the usual growing pains.

Here’s what that looks like in practice:

- Consistency at scale: Agents follow shared rules, reducing unexpected behavior across workflows.

- Reduced hallucinations: MCP connects AI models to real, trusted data sources, which can reduce inaccurate or fabricated responses.

- Enhanced multi-agent orchestration: MCP streamlines how multiple AI agents coordinate across tools and systems.

- Personalized interactions: With access to user-specific context, MCP enables more tailored, relevant outputs from AI agents.

- Reduced development time: Standardized integrations eliminate redundant builds.

Challenges of MCP

Leaders can’t just flip the switch and expect instant transformation. Like any major infrastructure overhaul, embracing MCP demands robust oversight and a strong foundation of trust to keep everything on track.

Here are a few key hurdles to keep in mind:

- Unintended automation: AI agents may act on incomplete or misinterpreted data via MCP without strict guardrails.

- Tool poisoning: Attackers may tamper with tool descriptions or metadata, misleading the model into performing unsafe operations or leaking sensitive data.

- Unauthorized access: Poorly secured MCP servers may grant excessive access to systems or data, increasing the risk of insider threats and data breaches.

- Security risks: Expanding tool access increases the risk of misused permissions and cybersecurity breaches.

- Compliance risks: Improper handling of regulated data (e.g., personal or health information) through MCP may violate laws like GDPR or HIPAA.
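Several of these risks can be narrowed with a policy check that runs before any tool call. The sketch below combines three common guardrails: an allowlist (unauthorized access), a pinned hash of each reviewed tool description (tool poisoning), and a human-approval hold for sensitive actions (unintended automation). It is a minimal illustration; `ToolPolicy` and the tool names are hypothetical and not part of MCP itself.

```python
import hashlib
from typing import Dict, Set


def fingerprint(description: str) -> str:
    """Hash a tool description so later changes can be detected."""
    return hashlib.sha256(description.encode("utf-8")).hexdigest()


class ToolPolicy:
    def __init__(self, allowed: Set[str], needs_approval: Set[str]) -> None:
        self.allowed = allowed
        self.needs_approval = needs_approval
        self.pinned: Dict[str, str] = {}

    def pin(self, tool: str, description: str) -> None:
        """Record the description that was reviewed and approved."""
        self.pinned[tool] = fingerprint(description)

    def check(self, tool: str, description: str, approved: bool = False) -> str:
        if tool not in self.allowed:
            return "deny: tool not on allowlist"
        if self.pinned.get(tool) != fingerprint(description):
            return "deny: tool description changed since review"
        if tool in self.needs_approval and not approved:
            return "hold: human approval required"
        return "allow"


# Hypothetical tools: one read-only, one destructive.
policy = ToolPolicy(allowed={"read_report", "delete_project"},
                    needs_approval={"delete_project"})
policy.pin("read_report", "Read a report by ID")
policy.pin("delete_project", "Delete a project")
```

A check like this sits naturally in the host or a gateway in front of the servers, so every call passes through one auditable decision point.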

Examples of how MCP helps

Wondering what MCP looks like in action? Here’s how organizations are using it right now:

By integrating native MCP support, Windows created a secure ecosystem for AI agents. MCP servers can now access the file system and system services, enabling agents (like Perplexity on Windows) to perform document searches using natural language requests with user permission.

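Claude’s “tool use” feature is generally available and lets Claude call external APIs to query live data, manipulate documents, and execute workflows dynamically. MCP builds on that capability: Claude applications such as Claude Desktop can connect to MCP servers, so tools are exposed through one standard interface instead of being defined separately for each application.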

Clarifai recently released support for MCP in version 11.5, enabling developers to build and host custom MCP servers. With this update, teams can connect internal tools through a standardized interface. This unlocks real-time decision making and automated data processing powered by AI agents.

Wrike’s MCP server bridges the gap between AI agents and live project data. Connecting agentic AI directly to Wrike’s platform enables real-time tool execution, task coordination, and dynamic updates across collaborative workspaces.

Using MCP for agentic workflows

While real-world examples prove MCP’s growing relevance, let’s zoom in on how MCP enables agentic workflows. Here’s how enterprise teams are using MCP to bring workflows to life:

In IT and operations, every second counts. MCP lets AI models monitor live system logs and automatically connect to ticketing tools, chat systems, and documentation. When an issue occurs:

- Agents pull incident details from tracking dashboards

- MCP connects them to issue trackers

- Agents escalate issues, tag owners, and summarize relevant fixes
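The incident steps above can be sketched as a short sequence of tool calls. The code assumes a generic `call_tool` function supplied by whatever MCP client is in use; the tool names (`get_incident`, `create_ticket`, `notify_owner`), their fields, and the fake implementation are hypothetical.

```python
from typing import Any, Callable, Dict, List


def handle_incident(incident_id: str, call_tool: Callable[..., Dict[str, Any]]) -> Dict[str, Any]:
    """Pull incident details, open a ticket, and escalate if severity is high."""
    incident = call_tool("get_incident", incident_id=incident_id)
    ticket = call_tool("create_ticket",
                       title=incident["summary"],
                       severity=incident["severity"])
    if incident["severity"] == "high":
        call_tool("notify_owner", ticket_id=ticket["id"], owner=incident["owner"])
    return ticket


# Fake tool layer that records calls, used here in place of real servers.
calls: List[str] = []


def fake_call_tool(name: str, **arguments: Any) -> Dict[str, Any]:
    calls.append(name)
    if name == "get_incident":
        return {"summary": "API latency spike", "severity": "high", "owner": "oncall"}
    if name == "create_ticket":
        return {"id": "T-1", **arguments}
    return {"ok": True}


ticket = handle_incident("INC-42", fake_call_tool)
```

Because the workflow depends only on `call_tool`, the dashboard, issue tracker, and chat system behind it can change without rewriting the logic.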

Marketing teams work across design tools, content platforms, and project trackers. With MCP:

- AI agents gather campaign objectives from briefing templates

- They retrieve assets and check deadlines in real time

- Campaigns get delivered faster, with agents coordinating reviews, managing dependencies, and updating stakeholders

In large organizations, aligning efforts across departments is no small feat. Using MCP:

- Autonomous agents tap into calendars, task boards, and shared resources

- They surface blockers, adjust timelines, and pull in updates from external tools like Google Sheets, Salesforce, or internal portals

- Teams stay on track even when projects span multiple toolsets
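As a rough illustration of the "surface blockers, adjust timelines" step, the sketch below works on task records that are assumed to have already been fetched through MCP tool calls. The `Task` shape, the helper names, and the sample dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Set


@dataclass
class Task:
    name: str
    due: date
    blocked_by: List[str]


def find_blocked(tasks: List[Task], done: Set[str]) -> List[str]:
    """Return tasks that still wait on at least one unfinished dependency."""
    return [t.name for t in tasks if any(dep not in done for dep in t.blocked_by)]


def push_out(task: Task, days: int) -> Task:
    """Return a copy of the task with its due date moved later."""
    return Task(task.name, task.due + timedelta(days=days), task.blocked_by)


# Sample records, as if gathered from several tools.
tasks = [
    Task("Design", date(2025, 1, 10), []),
    Task("Build", date(2025, 1, 20), ["Design"]),
    Task("Launch", date(2025, 1, 30), ["Build"]),
]
blocked = find_blocked(tasks, done={"Design"})
rescheduled = push_out(tasks[2], 7)
```

In a live workflow, the agent would write the adjusted date back through the task board's own tool rather than keeping it in memory.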

Explore Wrike’s MCP Server

Training smarter AI is only half the battle. The real edge? Giving those models the context they need to make meaningful decisions. Wrike MCP Server provides a standardized integration layer that connects AI agents to real-time work data in the platform.

With Wrike MCP Server, organizations can:

- Connect AI to existing workflows across tools and teams

- Power context-aware AI with real-time project data

- Enable agents to execute work across Wrike, integrations, and external systems

- Reduce complexity by using a centralized protocol rather than one-off custom integrations

Whether you’re piloting your first AI initiative or scaling intelligent workflows across the enterprise, Wrike MCP Server gives your AI the power to move from insight to impact.

FAQs

What is MCP in AI?

MCP, or Model Context Protocol, is a standardized method that allows AI models and agents to access external tools, data sources, and services in real time, giving them the context they need to take intelligent, autonomous action.

Why is MCP important for AI workflows?

MCP eliminates information silos by giving AI access to live, relevant context. This allows teams to streamline workflows, reduce manual steps, and enable more intelligent automation across systems.

Can MCP be used with internal enterprise systems?

Yes. MCP connects AI models with external and internal tools, including legacy platforms and enterprise applications. It supports secure, scalable integration across diverse tech stacks.

Why is MCP such a big deal?

MCP unlocks a new level of AI capability by giving it access to real-time data, tools, and context beyond its training. Instead of working in isolation, AI models can now take meaningful action across platforms.

What does MCP allow you to do that you couldn’t do before?

Before MCP, integrating AI with enterprise tools often required brittle, one-off solutions. Now, teams can connect AI models to multiple systems through a standardized protocol.